We are deep in the deployment phase of Generative AI.

According to the 2025 Google DORA report, 90% of software professionals now incorporate AI into their daily workflows. The hype cycle is officially over. This is now non-negotiable baseline tooling.

The initial promise was exponential efficiency: AI would handle the heavy lifting, freeing us for high-value engineering.

But if you look at actual telemetry from the field, not vendor marketing brochures, but hard data from mature organizations, the dashboard is flashing red.

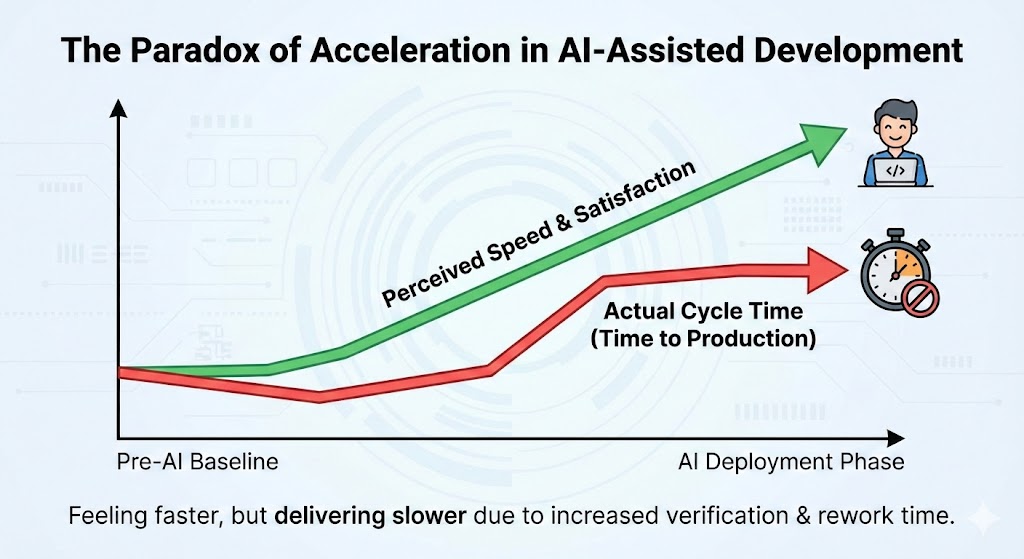

We are witnessing a profound Paradox of Acceleration. Developers report feeling in the flow, with perceived productivity boosts up to 55% in controlled environments. Yet, objective telemetry indicates that cycle times, the time from first commit to actual production deployment, have stagnated.

As engineering leaders, we must stop managing by the “vibe” of instant generation and start managing by the physics of your delivery lifecycle. The law of gravity applies to software: Code is Mass.

AI tools allow your team to generate mass at an industrial scale. But unless you have exponentially increased your structural integrity, your automated testing coverage, your review bandwidth, your observability, you are simply building a heavier tower on the same cracking foundation.

Gravity doesn’t care about your roadmap or your quarterly goals. If the load exceeds the capacity, the collapse is not a possibility, it is a mathematical certainty.

The “Vibe Coding” Trap: Shifting the Bottleneck

There is a massive disconnect between the feeling of speed and the reality of engineering outcomes.

Early studies on “greenfield” tasks showed massive gains. This created a dopamine feedback loop. Developers feel productive because the agonizing pause of syntax recall is gone. But professional engineering is rarely greenfield. It is mostly “brownfield”, navigating complex, existing dependency trees.

When we look at data for real-world maintenance tasks, the narrative flips. A 2025 study found AI-equipped developers took 19% longer to complete complex modification tasks compared to control groups.

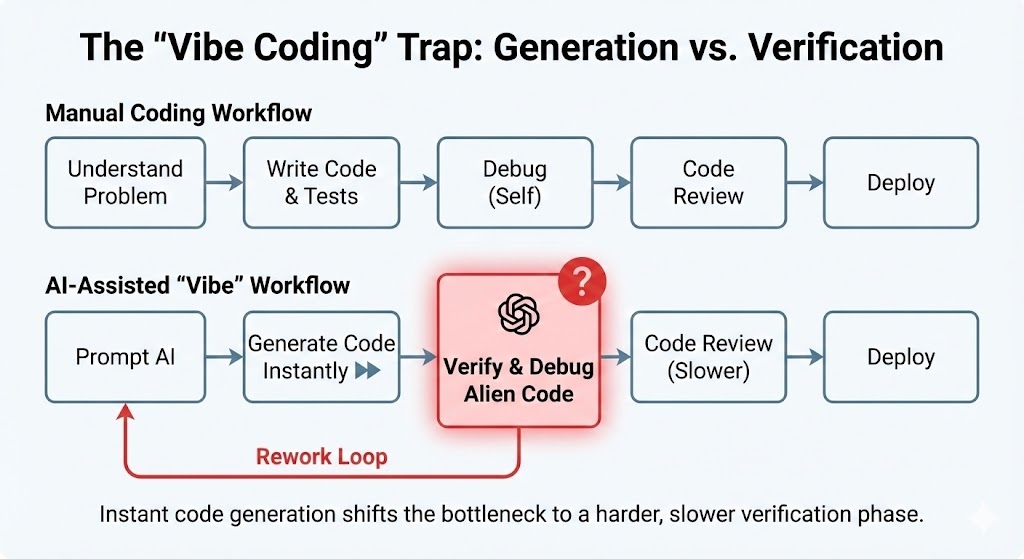

Why? Because we shifted the bottleneck.

- Old Bottleneck: Typing and syntax recall (Generation).

- New Bottleneck: Reading, verifying, and debugging alien logic (Verification).

AI is confident, but frequently wrong. Debugging code you didn’t write, which lacks coherent human intent, is exponentially harder than writing it yourself. We are trading cheap typing time for expensive debugging time.

Expectation vs. Reality

Let’s look at the profit & loss of AI adoption. When we compare vendor promises against enterprise telemetry, the deficit becomes clear.

| Metric | The Sales Pitch (Vendor/Survey Data) | The Site Reality (Forensic/Telemetry Data) | The Structural Cost |

| Velocity | +55% Faster (GitHub Greenfield Study) | -19% Slower on complex maintenance tasks | Latency Spike: Code sits in review/QA longer due to complexity. |

| Quality | “More time for deep work” | +41% Bug Rate in AI-heavy codebases | Rework Loop: Speed in typing is lost to fixing bugs in production. |

| Maintenance | “Clean, efficient code” | Doubled Code Churn & Collapsed Refactoring (<10%) | Technical Inflation: We are building “Write-Only” legacy systems. |

| Security | “Secure by design” | 55% Pass Rate on CWE Top 25 | Risk Injection: Automating the introduction of XSS/SQLi vulnerabilities. |

Table 1: The divergence between perceived value and engineering outcomes (2024-2025 Data).

Structural Integrity is Collapsing (The Rise of AI Debt)

If we analyze the code itself, the picture gets uglier. We are rapidly accumulating a new toxic asset class: AI Debt.

Forensic analysis by GitClear on 211 million lines of code reveals that while volume is up, structural health is crashing.

- Code Churn has Doubled: Lines of code reverted within two weeks of authorship have doubled against pre-AI baselines. We are generating massive amounts of “throwaway” code that doesn’t survive first contact with reality.

- Refactoring has Collapsed: The rate of refactored code plummeted from 25% in 2021 to under 10% in 2024. AI models predict the next token based on patterns; they are biased toward repeating existing mistakes rather than abstracting them.

- Security Vulnerabilities are Baked In: We are automating the injection of flaws. Recent analysis shows extremely high failure rates for basic issues: 86% failure on XSS and 20% failure on SQL Injection.

We are building bloated, repetitive, insecure systems at record speed.

This isn’t an aesthetic issue; it is financial leverage working against your future velocity.

The “Mirror and Multiplier” Effect

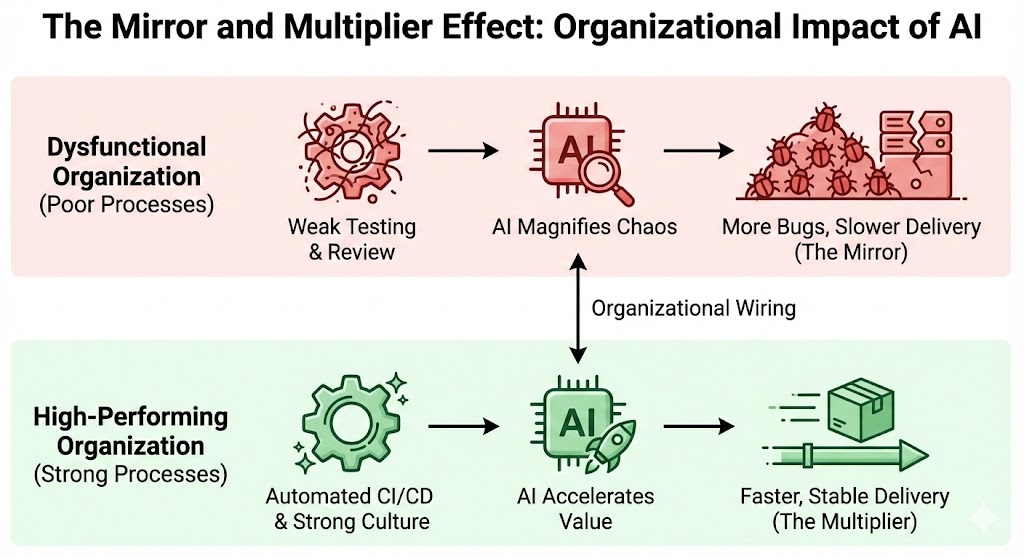

Is AI a net negative? No. It is a power tool. Its impact depends entirely on the discipline of the operator.

The critical insight from the 2025 DORA report is the “Mirror and Multiplier” effect. AI is not magic pixie dust that fixes broken engineering cultures. It is an amplifier.

- The Mirror (Dysfunction): If your organization has chaotic processes, weak testing cultures, and high tolerance for debt, AI will mirror that dysfunction. It helps you generate spaghetti code faster.

- The Multiplier (Excellence): If you have strong “wiring”, robust CI/CD, high-coverage automated testing, and rigorous standards, AI acts as an accelerator. You have the systemic capacity to catch the AI’s mistakes instantly.

You cannot buy a tool to bypass the hard work of building a mature engineering culture.

Real-world Case Studies:

Theory is useless without field data. When we look at real-world deployments in 2024–2025, the data confirms the “Mirror Effect”: the outcome is determined not by the AI model you buy, but by the organizational wiring you plug it into.

Success stories are not about magic, they are about rigorous structural preparation. Failures are almost always failures of governance.

The WINS: Structural Capacity and Targeted Strikes

Mercado Libre

With 9,000+ developers, they reported a 50% reduction in coding time. How? They didn’t just hand out licenses and hope for the best. Their success was predicated on a pre-existing, standardized microservices architecture and strong platform engineering capabilities.

They had the structural capacity to absorb the increased velocity safely. They built the high-speed rail network before buying the bullet train.

Duolingo

Instead of trying to automate complex feature creation, they focused AI on pure toil: regression testing workflows. The result was a 70% reduction in manual testing time, turning hours-long processes into minutes.

This is tactical brilliance. They didn’t accelerate code generation (which increases risk), they accelerated verification (which decreases risk). They used AI to tighten the feedback loop, improving overall system stability.

Pinterest didn’t aim for speed; they aimed for safety. They executed a measured rollout with internal “Safety” checks and built a custom internal platform to govern AI usage before scaling.

They treated AI like an unproven junior engineer. They built guardrails first. They recognized that without governance, speed is just velocity towards a cliff.

The FAILURES: Abdication of Responsibility

The Replit & Air Canada Effect

The industry has seen predictable failures where human-led processes broke down. Replit highlighted instances where unsupervised AI generation led to “negative productivity.” Air Canada faced legal liability when its chatbot hallucinated a policy that the company was forced to honor.

These are not AI failures; they are management failures. “Blind trust” in probabilistic tooling is professional negligence. If you abdicate your responsibility to verify outputs, you deserve the resulting chaos.

Syntax is Commodity, Structure is Leverage

We are exiting the era where syntax was the constraint. Writing code is now a commodity, abundant, cheap, and infinite.

In an economy of infinite syntax, structural judgment is the only scarcity.

The “10x Developer” of the AI era is not the one who generates the most code. It is the one with the discipline to reject the most code. It is the one who understands that every line accepted into the repository is a permanent liability that must be defended against entropy.

The Stabilization Plan

If your team is using AI, you are in production. To prevent the collapse predicted by the data, you must implement a rigorous engineering protocol.

Phase 1: Containment

- Treat AI Code as Untrusted User Input: Stop treating Copilot suggestions as “peer code.” Treat them with the same hostility you treat an external API payload. Implement an “Air Gap” Policy: No AI-generated code merges to the main branch without passing a dedicated SAST.

- Invert Your Metrics Dashboard: Deprecate “Velocity” and “Commit Frequency” as primary KPIs immediately. They are being gamed by inflation.

- Primary Metric: Change Failure Rate. If this rises, AI usage gets throttled.

- Secondary Metric: Code Longevity. Measure how much AI-generated code survives past the 2-week mark. If churn is high, you aren’t building features, you’re prototyping in production.

Phase 2: Reinforcement

- Automated “Refusal to Merge”: You cannot scale code generation without scaling automated rejection.

- Property-Based Testing: Unit tests are no longer enough (AI can write passing unit tests for broken code). Implement property-based testing (fuzzing) to bombard the AI’s logic with edge cases it didn’t anticipate.

- The “Context Check”: Mandate that every PR description includes a “Why” section written by the human, explaining the architectural decision. If the developer cannot explain the intent independent of the syntax, the PR is rejected.

- Debt Repayment Quotas: To combat the collapse in refactoring (down to <10%), enforce a “Boy Scout Rule”. For every feature PR generated with AI, the developer must include a corresponding refactor or cleanup of an existing module. Tie this to mergeability.

Phase 3: Calibration

- The “Senior-to-Junior” Review Ratio: A Senior Engineer can no longer review the same amount of code. The cognitive load of verifying “hallucinated logic” is higher than reviewing human logic.

- Action: Reduce the review load on Seniors by 20% to account for the increased density of AI code. Do not expect them to review faster just because the code was written faster.

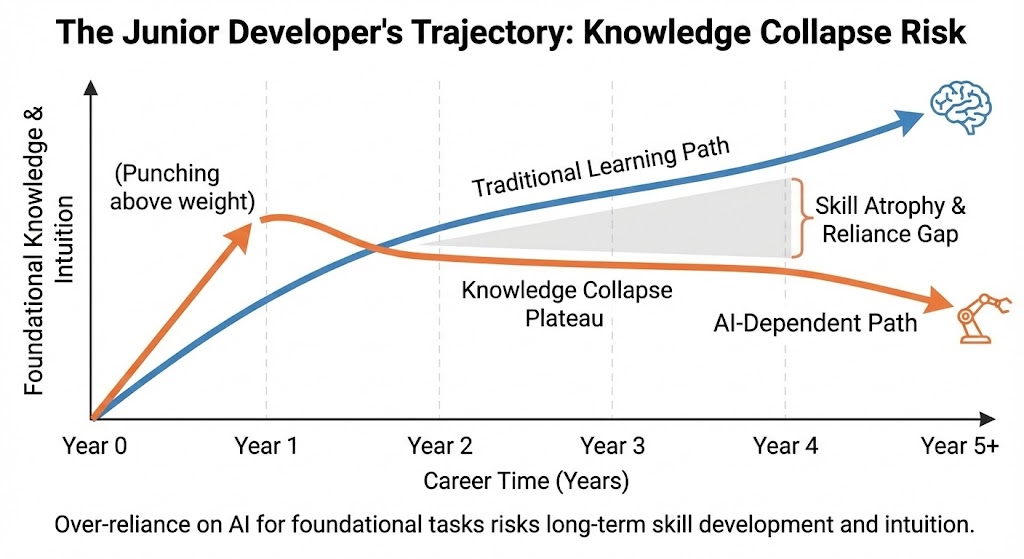

- Mandatory “Analog Weeks” for Juniors: To prevent the “Knowledge Collapse,” institute training periods where Junior engineers must execute tasks without AI assistance. They must prove they understand the memory model and the SQL execution plan before they are allowed to automate it. You cannot automate what you do not understand.

You are not managing a software team anymore; you are managing a nuclear power plant.

The reaction (code generation) is self-sustaining and powerful, but without heavy lead shielding (testing/review) and control rods (governance), it will melt down.

Conclusion

We have officially exited the “Artisan Era” of software development, where code was hand-crafted and scarce. We have entered the Industrial Era, where code is mass-produced and abundant.

In this new reality, the primary danger to your organization is no longer a lack of speed, it is a lack of friction.

Generative AI has removed the friction of writing code, but that friction served a purpose: it gave us time to think. Without it, we are flooding our repositories with “presumed competence”, logic that looks correct but has not earned its place in the system.

The engineering teams that survive this transition will not be the ones who generate the most features. They will be the ones who build the best filtration systems.

The mandate is clear:

- Stop celebrating volume. A large codebase is just a large surface area for bugs.

- Start rewarding skepticism. The most valuable engineer is no longer the fastest typist; it is the one who refuses to merge a pull request because the logic feels “hollow.”

- Shift from Creation to Curation. Your job is no longer to write the code; your job is to certify that the code is safe to run.

The hype is done, now we have to manage the cleanup.

Stop building faster, start building things that don’t fall down.